If you’re a web developer looking to understand or extend Microsoft 365 Copilot, you need to grasp how it actually works under the hood. Without this foundational knowledge, you’ll struggle to make informed decisions about when and how to customize it for your organization.

One of the first things I like to do before explaining the customization, extensibility, or development story for Microsoft 365 Copilot is cover how it works. Through my webinars, conference presentations, and my Build Declarative Agents for Microsoft 365 Copilot workshop, I’ve found that a solid understanding of how it works makes it much easier to understand what options are available to you.

So, let me walk you through the Microsoft 365 Copilot tech stack and explain what happens when you submit a prompt to Microsoft 365 Copilot.

The Copilot tech stack

By the way, when I refer to “Copilot” throughout this article, I mean Microsoft 365 Copilot specifically. Microsoft has over 100 different products with “Copilot” in the name (not really, well maybe, but you get the point), so let’s be clear about which one we’re discussing.

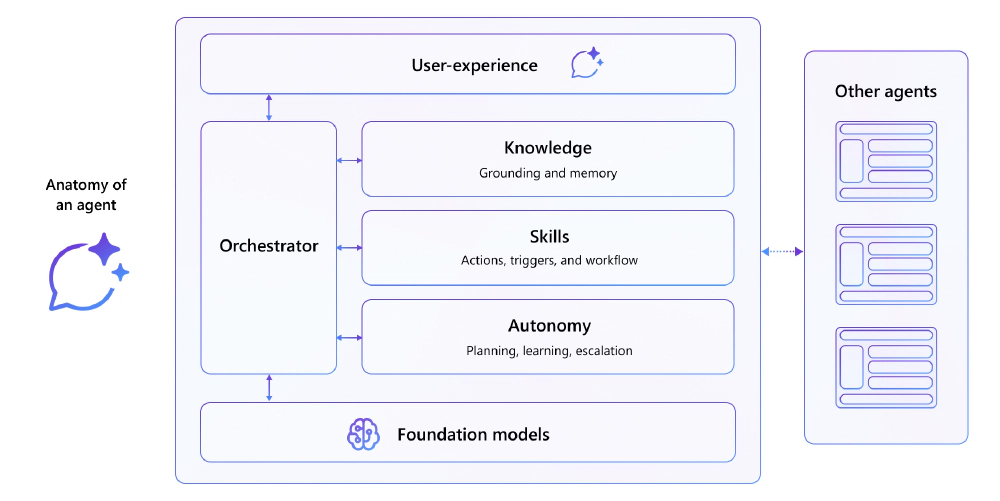

The Copilot tech stack consists of four main components:

- User Experience: This is where you interact with Copilot - through the chat experience in Microsoft Teams, m365.cloud.microsoft, or within individual apps like Word, Excel, PowerPoint, and Outlook.

- Orchestrator: Think of this as the brain that coordinates everything. It processes your prompt, determines what additional information is needed, fetches that data, and works with the language model to generate responses.

- Knowledge and Skills: This includes the semantic index (grounded in Microsoft Graph), various data sources, and extensibility options like connectors and actions.

- Foundational Model: The underlying Large Language Model (LLM) that Microsoft has chosen - currently models like GPT-4 or GPT-4o Mini.

Microsoft 365 Copilot tech stack

Real world example

To help explain how Microsoft 365 Copilot works, let me share a real-world example: a custom agent I created and use in my own Microsoft 365 tenant. I’ll use it to provide context for each of the steps I walk through.

Simplifying the Example for This Article

While my example is a custom agent, I’ll explain it in an abstract way to focus only on how Microsoft 365 Copilot works.

Later, in the section Where customization fits in, I’ll explain how a custom agent can take this experience to the next level.

I create and publish email courses, self-paced video courses, webinars, and workshops on various Microsoft 365 development topics including the SharePoint Framework (SPFx), Microsoft Teams app development, and Microsoft 365 Copilot.

Consider the following:

- None of this content lives natively in my Microsoft 365 tenant, nor is it publicly accessible.

- The lesson transcripts exist only in my Notion instance (where I author them) and in Voitanos Learn (where they’re published).

- Each course’s syllabus exists in Voitanos Learn, where courses contain chapters which contain lessons. Lessons can be a mix of text, videos, and code samples.

- Students enroll in my courses and access the content primarily via their student accounts for Voitanos Learn.

With this scenario in mind, now we can explore how Copilot works.

What happens when you submit a prompt

Let’s look at how Copilot works. I’ll use my example scenario above to help articulate each part of the process.

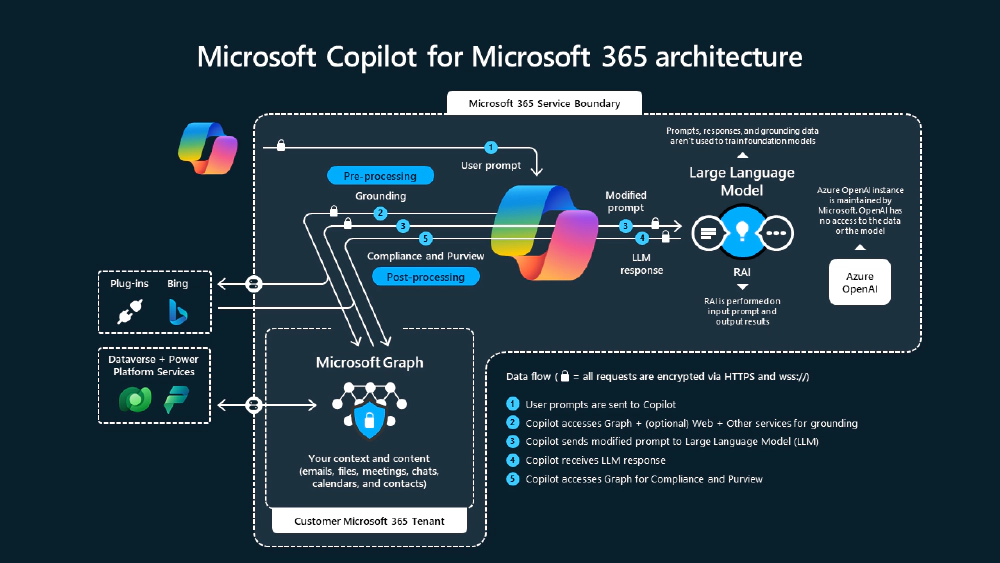

Here’s the step-by-step process that occurs when you send a message to Copilot:

Microsoft 365 Copilot - Under the Covers

Look familiar? This diagram is frequently used by Microsoft at events.

Step 1: Initial prompt processing

Your prompt goes to the orchestrator, which combines it with a system prompt. The system prompt - which you never see - contains the rules and guidelines that tell the LLM what it can and cannot do. This includes safety restrictions and behavioral guidelines among other things.

For my example scenario, let’s say the user submits the following prompt:

PROMPT: Where can I use SPFx web parts in Microsoft 365? Add guidance information on if these are good options or not, and what I should be careful of. Incorporate sources other than Microsoft’s documentation in your response.

The default system prompt makes sure the prompt complies with the responsible AI rules Microsoft has defined for Microsoft 365 Copilot and with the types of content Copilot has access to.

But it won’t have any special context on my specific scenario.
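To make Step 1 concrete, here’s a minimal TypeScript sketch of an orchestrator pairing a hidden system prompt with the user’s prompt. It assumes the common chat-completions message shape (`system` and `user` roles). Microsoft hasn’t published Copilot’s real system prompt or wire format, so `SYSTEM_PROMPT` and `buildInitialMessages` are hypothetical.

```typescript
// Sketch only: Copilot's real system prompt and message format are not public.
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Hypothetical placeholder for the rules the user never sees.
const SYSTEM_PROMPT: string = [
  "Follow the responsible AI guidelines.",
  "Only use content the current user is allowed to access.",
  "Decline requests that fall outside these rules.",
].join("\n");

// Combine the hidden system prompt with the user's prompt.
// The system message goes first so it frames everything that follows.
function buildInitialMessages(userPrompt: string): ChatMessage[] {
  return [
    { role: "system", content: SYSTEM_PROMPT },
    { role: "user", content: userPrompt },
  ];
}

const initialMessages = buildInitialMessages(
  "Where can I use SPFx web parts in Microsoft 365?"
);
console.log(initialMessages.map((m) => m.role).join(" -> ")); // system -> user
```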

Step 2: Intent analysis and planning

The orchestrator sends your prompt to the LLM with instructions to analyze what you’re asking for and create a plan for gathering additional information.

For example, if you ask “summarize my meetings for the next two days and any emails from those attendees in the last two weeks,” the LLM responds with a plan: “I need Andrew’s calendar for the next two days, then I need emails from all the people in those meetings from the last two weeks.”

For my example scenario…

The LLM’s action plan returned to the orchestrator will include questions about where SharePoint Framework web parts can be used.
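The plan from Step 2 can be thought of as a list of steps with dependencies: in the meetings example, the email lookup can’t run until the calendar lookup has produced the list of attendees. The TypeScript sketch below uses a hypothetical `PlanStep` shape (the format the LLM actually returns isn’t documented) and orders the steps with a simple depth-first topological sort.

```typescript
// Hypothetical shape of one step in the plan the LLM returns.
interface PlanStep {
  id: string;
  source: "calendar" | "email" | "semanticIndex" | "webSearch";
  description: string;
  dependsOn: string[]; // ids of steps whose results this step needs
}

const plan: PlanStep[] = [
  { id: "meetings", source: "calendar", description: "Andrew's meetings for the next two days", dependsOn: [] },
  { id: "emails", source: "email", description: "Emails from those attendees in the last two weeks", dependsOn: ["meetings"] },
];

// Depth-first topological sort: each step is emitted only after its dependencies.
function executionOrder(steps: PlanStep[]): string[] {
  const byId = new Map(steps.map((s) => [s.id, s] as const));
  const done = new Set<string>();
  const order: string[] = [];

  const visit = (id: string, path: Set<string>): void => {
    if (done.has(id)) return;
    if (path.has(id)) throw new Error(`Cycle detected at step "${id}"`);
    const step = byId.get(id);
    if (!step) throw new Error(`Unknown step "${id}"`);
    path.add(id);
    for (const dep of step.dependsOn) visit(dep, path);
    path.delete(id);
    done.add(id);
    order.push(id);
  };

  for (const s of steps) visit(s.id, new Set<string>());
  return order;
}

console.log(executionOrder(plan).join(" -> ")); // meetings -> emails
```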

Step 3: Data gathering

Based on the plan, the orchestrator fetches additional data to provide context for your prompt. This process is called “grounding the data.” The orchestrator might:

- Query the semantic index (more on this in a moment) for calendar and email data

- Search the semantic index for relevant documents

- Call external APIs through plugins or actions

- Retrieve data from Microsoft Dataverse or Microsoft Fabric

This isn’t a single round trip. The orchestrator may go back and forth multiple times, gathering data from different sources as needed.
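The back-and-forth in Step 3 behaves like a loop: query the sources, let the model look at what came back, and repeat until it needs nothing more. Here’s a TypeScript sketch of that loop. The `Retriever` type, the `nextQueries` callback (standing in for the LLM’s decision about what else it needs), the round limit, and the fake sources are all assumptions for illustration.

```typescript
// Hypothetical retriever: each source answers a query with text snippets.
type Retriever = (query: string) => Promise<string[]>;

interface GroundingResult {
  snippets: string[];
  rounds: number;
}

// Multi-round grounding sketch. `nextQueries` stands in for the LLM deciding
// what else it needs after reviewing what has been gathered so far.
async function ground(
  initialQueries: string[],
  retrievers: Record<string, Retriever>,
  nextQueries: (gathered: string[]) => string[],
  maxRounds = 3
): Promise<GroundingResult> {
  const gathered: string[] = [];
  let queries = initialQueries;
  let rounds = 0;
  // Keep going until nothing more is needed or the round budget is spent.
  while (queries.length > 0 && rounds < maxRounds) {
    rounds++;
    for (const query of queries) {
      // Fan the query out to every source in parallel.
      const results = await Promise.all(
        Object.values(retrievers).map((retrieve) => retrieve(query))
      );
      for (const snippets of results) gathered.push(...snippets);
    }
    queries = nextQueries(gathered);
  }
  return { snippets: gathered, rounds };
}

// Fake sources standing in for the semantic index and web search.
const sources: Record<string, Retriever> = {
  semanticIndex: async (q) => [`[tenant] note about ${q}`],
  webSearch: async (q) => [`[web] article about ${q}`],
};

ground(
  ["where SPFx web parts run"],
  sources,
  (gathered) => (gathered.length < 4 ? ["SPFx web parts in Teams"] : [])
).then((r) => console.log(r.rounds, r.snippets.length)); // 2 4
```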

For my example scenario…

The orchestrator will submit queries to the semantic index to find any content in my Microsoft 365 tenant about web parts, and use the web search capability to find relevant content on the internet.

But the orchestrator is limited to what it can find in my tenant’s index or on the internet. It won’t have any visibility into what’s in my Notion instance or in Voitanos Learn, or whether the person asking the question is a student.

Step 4: Responsible AI filtering

Throughout this process, Microsoft implements responsible AI checks on both incoming prompts and outgoing responses. The system evaluates content against four categories: hate speech, violence, self-harm, and sexual content. Each category has different severity levels that determine what’s allowed or blocked.
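Here’s a rough TypeScript sketch of a per-category severity check, using the same four categories and a numeric severity scale like the one Azure AI Content Safety reports. The threshold values are hypothetical: Microsoft doesn’t publish the levels Copilot uses, and you can’t change them.

```typescript
type HarmCategory = "Hate" | "Violence" | "SelfHarm" | "Sexual";

// Severity per category, as a classifier might report it (higher = more severe).
type SeverityScores = Record<HarmCategory, number>;

// Hypothetical thresholds: a score at or above the threshold is blocked.
const BLOCK_AT: SeverityScores = { Hate: 4, Violence: 4, SelfHarm: 2, Sexual: 4 };

interface FilterDecision {
  allowed: boolean;
  blockedCategories: HarmCategory[];
}

// The same check can run on the incoming prompt and on the generated response.
function evaluateContent(
  scores: SeverityScores,
  thresholds: SeverityScores = BLOCK_AT
): FilterDecision {
  const categories = Object.keys(thresholds) as HarmCategory[];
  const blockedCategories = categories.filter((c) => scores[c] >= thresholds[c]);
  return { allowed: blockedCategories.length === 0, blockedCategories };
}

const promptCheck = evaluateContent({ Hate: 0, Violence: 2, SelfHarm: 0, Sexual: 0 });
console.log(promptCheck.allowed); // true
```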

Step 5: Response generation

Once all the necessary data is gathered, the orchestrator sends your original prompt back to the foundational model along with all the additional context it collected. The LLM then generates a response based on both its foundational knowledge and additional context collected by the orchestrator.

For my example scenario…

The orchestrator will take what it found on the internet, plus the limited information it found in my tenant’s semantic index, and include it with my original prompt. Now the LLM has additional context to create the generative response.

But the orchestrator, and thus the LLM, didn’t get any valuable context on my course transcripts because they’re not in Microsoft 365.
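Step 5 boils down to packaging the gathered context with the original prompt. The TypeScript sketch below shows one way to do that. The layout (numbered snippets in a second system message so the model can cite them) is an assumption; the format the orchestrator actually uses isn’t public, and the snippets are made up.

```typescript
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

interface GroundingSnippet {
  source: "semanticIndex" | "webSearch";
  title: string;
  text: string;
}

// Place the gathered context between the system prompt and the original user
// prompt. Numbering each snippet gives the model a handle to cite.
function buildGroundedMessages(
  systemPrompt: string,
  userPrompt: string,
  snippets: GroundingSnippet[]
): ChatMessage[] {
  const context = snippets
    .map((s, i) => `[${i + 1}] (${s.source}) ${s.title}: ${s.text}`)
    .join("\n");
  return [
    { role: "system", content: systemPrompt },
    { role: "system", content: `Answer using this context where relevant:\n${context}` },
    { role: "user", content: userPrompt },
  ];
}

const groundedMessages = buildGroundedMessages(
  "Follow the responsible AI guidelines.",
  "Where can I use SPFx web parts in Microsoft 365?",
  [
    { source: "webSearch", title: "Community blog post", text: "SPFx web parts can run on SharePoint pages and in Teams tabs." },
    { source: "semanticIndex", title: "Tenant wiki page", text: "Notes from an internal SPFx project." },
  ]
);
console.log(groundedMessages[1].content);
```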

Step 6: Final filtering and delivery

The generative response goes through one more responsible AI check before being delivered back to you through the user experience layer.

The semantic index: Copilot’s knowledge foundation

What’s this semantic index I mentioned above?

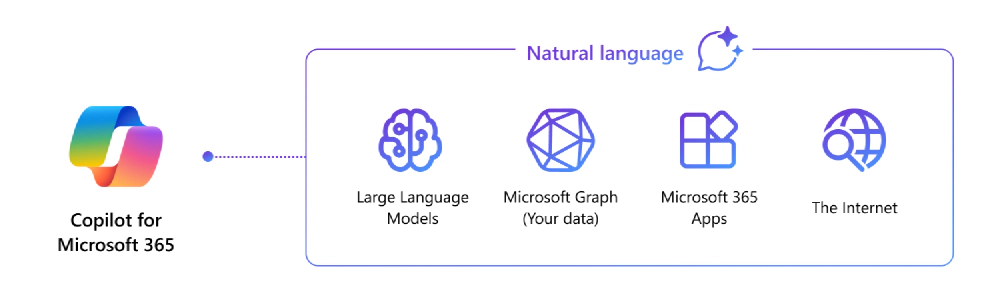

The semantic index is a critical part of the value Microsoft 365 Copilot adds beyond public AI chatbots such as ChatGPT, and one of the most important components in this process.

If you’re familiar with SharePoint search, you understand keyword indexing - documents get indexed, and when you search, you’re matching keywords to find relevant content.

The semantic index works differently.

Microsoft 365 Copilot Knowledge Sources

Instead of keyword matching, it uses semantic relationships. If you search for “puppy,” it understands the relationship to “dog” is closer than to “cat,” and both are closer than to “automobile.”

Technically, the semantic index creates numerical representations (called embeddings) of text content and stores them in a vector database. When you submit a prompt, Copilot can perform semantic queries to find content that’s conceptually related to your request, not just keyword matches.
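To see why “puppy” lands closer to “dog” than to “automobile,” here’s a toy TypeScript sketch that ranks terms by cosine similarity, a common way to compare embeddings. The three-dimensional vectors are invented by hand for illustration; real embeddings come from a trained model with far more dimensions, and I’m not claiming this is the exact metric the semantic index uses.

```typescript
type Vector = number[];

// Cosine similarity: 1 means the vectors point the same way, 0 means orthogonal.
function cosineSimilarity(a: Vector, b: Vector): number {
  if (a.length !== b.length) throw new Error("Vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Hand-made toy "embeddings" with dimensions [animal, canine, vehicle].
const embeddings: Record<string, Vector> = {
  puppy: [0.9, 0.9, 0.0],
  dog: [0.9, 0.8, 0.1],
  cat: [0.9, 0.1, 0.0],
  automobile: [0.0, 0.0, 1.0],
};

// Rank every other term by how close its vector is to the query's vector.
function rankBySimilarity(query: string, index: Record<string, Vector>): string[] {
  const q = index[query];
  if (!q) throw new Error(`No embedding for "${query}"`);
  return Object.keys(index)
    .filter((term) => term !== query)
    .sort((x, y) => cosineSimilarity(q, index[y]) - cosineSimilarity(q, index[x]));
}

console.log(rankBySimilarity("puppy", embeddings).join(" > ")); // dog > cat > automobile
```

A vector database at real scale typically uses approximate nearest-neighbor search rather than sorting every vector, but the idea of “closest meaning wins” is the same.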

Microsoft creates this semantic index by indexing content from Microsoft Graph - your emails, documents, calendar items, and more. The security works just like SharePoint search: content in the semantic index maintains its original access control lists (ACLs), so Copilot will only see content that the current user has permission to access.
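Copilot does this security trimming inside the service, so you never write it yourself. The TypeScript sketch below only illustrates the principle: each result carries ACL entries, and anything the current user can’t access is dropped before it can become grounding data. The ACL shape loosely mirrors the `type`/`value`/`accessType` entries used on Copilot connector items; the “deny wins” rule, the users, and the group IDs are assumptions for this sketch.

```typescript
type PrincipalType = "user" | "group" | "everyone";

interface AclEntry {
  type: PrincipalType;
  value: string; // user or group ID; ignored for "everyone"
  accessType: "grant" | "deny";
}

interface IndexedItem {
  id: string;
  title: string;
  acl: AclEntry[];
}

interface CurrentUser {
  id: string;
  groupIds: string[];
}

// Does this ACL entry apply to the current user?
function appliesTo(entry: AclEntry, user: CurrentUser): boolean {
  if (entry.type === "everyone") return true;
  if (entry.type === "user") return entry.value === user.id;
  return user.groupIds.includes(entry.value); // "group"
}

// Assumed rule: any matching deny wins; otherwise a matching grant is required.
function canAccess(item: IndexedItem, user: CurrentUser): boolean {
  const applicable = item.acl.filter((e) => appliesTo(e, user));
  if (applicable.some((e) => e.accessType === "deny")) return false;
  return applicable.some((e) => e.accessType === "grant");
}

// Drop results the user isn't allowed to see before anything reaches the LLM.
function securityTrim(results: IndexedItem[], user: CurrentUser): IndexedItem[] {
  return results.filter((item) => canAccess(item, user));
}

const searchResults: IndexedItem[] = [
  { id: "1", title: "Company holiday schedule", acl: [{ type: "everyone", value: "", accessType: "grant" }] },
  { id: "2", title: "SPFx lesson transcript", acl: [{ type: "group", value: "students", accessType: "grant" }] },
  { id: "3", title: "Instructor notes", acl: [{ type: "user", value: "andrew", accessType: "grant" }] },
];

const alice: CurrentUser = { id: "alice", groupIds: ["students"] };
console.log(securityTrim(searchResults, alice).map((r) => r.title).join(" | "));
// Company holiday schedule | SPFx lesson transcript
```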

Where customization fits in

While you can’t control most of the Copilot stack - the orchestrator, the LLM, the LLM’s configuration, and responsible AI settings are all managed by Microsoft - you do have several extensibility options:

- Custom Instructions: Add additional context to the system prompt to influence how Copilot behaves and responds.

- Knowledge Sources: Extend Copilot’s knowledge beyond Microsoft Graph by ingesting content that doesn’t live in Microsoft 365 so it can be indexed and added to the semantic index.

- Actions and Tools: Teach Copilot new skills by connecting it to external APIs for real-time data access.

These customizations come together as agents - scoped, scenario-specific versions of Copilot that are experts at particular tasks.
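For pro-code declarative agents, these three pieces are described in a manifest. The TypeScript object below is loosely modeled on that manifest (`instructions`, `capabilities`, `actions`); treat the field names as illustrative and check the official declarative agent schema before relying on them. Every value here (agent name, connection ID, action ID, plugin file) is hypothetical.

```typescript
// Field names loosely follow the declarative agent manifest; illustrative only.
interface Capability {
  name: string; // e.g. "WebSearch" or "GraphConnectors"
  connections?: { connection_id: string }[];
}

interface ActionReference {
  id: string;
  file: string; // path to an API plugin definition
}

interface DeclarativeAgent {
  name: string;
  description: string;
  instructions: string; // custom instructions added to the system prompt
  capabilities: Capability[]; // knowledge sources
  actions: ActionReference[]; // skills backed by external APIs
}

const courseAgent: DeclarativeAgent = {
  name: "Course Assistant",
  description: "Answers questions using course content.",
  instructions: "Prefer course transcripts over web results and cite the lesson URL.",
  capabilities: [
    { name: "WebSearch" },
    { name: "GraphConnectors", connections: [{ connection_id: "coursetranscripts" }] },
  ],
  actions: [{ id: "studentLookup", file: "student-api-plugin.json" }],
};

// Report which of the three extensibility options an agent actually uses.
function extensibilityUsed(agent: DeclarativeAgent): string[] {
  const used: string[] = [];
  if (agent.instructions.trim().length > 0) used.push("instructions");
  if (agent.capabilities.length > 0) used.push("knowledge");
  if (agent.actions.length > 0) used.push("actions");
  return used;
}

console.log(JSON.stringify(courseAgent, null, 2));
console.log(extensibilityUsed(courseAgent).join(", ")); // instructions, knowledge, actions
```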

Revisiting that example scenario

Let’s go back to my example scenario and see how a custom agent could improve the experience for the user. A custom agent could use all three of the customization options above in the following ways.

- Knowledge Sources: I could create a custom Copilot connector that ingests all transcripts from my courses into the semantic index, with each transcript containing the following metadata:

  - Name of the lesson, chapter, and course

  - Type of content in the transcript, such as “SPFx”, “web part”, etc.

  - URL to the lesson in the course in Voitanos Learn

- Actions and Tools: I could create a series of custom actions that call the REST API of the app I use to implement Voitanos Learn to determine whether the person who asked the question is even a student and which courses they have access to, and to search student discussions in our student-only forum.

- Custom Instructions: Finally, I could add custom instructions to the system prompt that tell the LLM about the additional information it can retrieve and how to use it, such as:

  - where to find the course transcripts in the semantic index

  - to always prioritize information from my custom Copilot connector over content found on the internet

  - to include a link (aka: citation) to the lesson in Voitanos Learn

  - which actions to use to determine whether the student has access to the course where an answer was found

  - to recommend the student enroll in the course if they don’t have access to the lesson where the answer was found
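Here’s what the knowledge-source piece could look like in code: a TypeScript sketch that turns one transcript into the request body for a Copilot connector item, which you’d send with a `PUT` to Microsoft Graph’s `/external/connections/{connectionId}/items/{itemId}` endpoint. The property names must match a schema you register on the connection first. The schema, connection ID, group ID, and transcript values are hypothetical, and authentication is left out.

```typescript
// Hypothetical shape of a transcript exported from the course platform.
interface Transcript {
  lessonId: string;
  lesson: string;
  chapter: string;
  course: string;
  topics: string[];
  url: string;
  text: string;
}

const GRAPH_BASE = "https://graph.microsoft.com/v1.0";

// URL for creating or updating a single item on a connection.
function externalItemUrl(connectionId: string, itemId: string): string {
  return `${GRAPH_BASE}/external/connections/${encodeURIComponent(connectionId)}/items/${encodeURIComponent(itemId)}`;
}

// Build the request body. Properties must match the connection's registered
// schema; collection properties carry an @odata.type annotation.
function toExternalItem(t: Transcript, readersGroupId: string) {
  return {
    acl: [{ type: "group", value: readersGroupId, accessType: "grant" }],
    properties: {
      lesson: t.lesson,
      chapter: t.chapter,
      course: t.course,
      "topics@odata.type": "Collection(String)",
      topics: t.topics,
      url: t.url,
    },
    content: { value: t.text, type: "text" },
  };
}

const transcript: Transcript = {
  lessonId: "spfx101lesson03",
  lesson: "Hosting web parts",
  chapter: "Getting started",
  course: "Example SPFx course",
  topics: ["SPFx", "web part"],
  url: "https://example.com/courses/spfx/lessons/hosting-web-parts",
  text: "In this lesson we look at where web parts can run.",
};

console.log(externalItemUrl("coursetranscripts", transcript.lessonId));
console.log(JSON.stringify(toExternalItem(transcript, "00000000-0000-0000-0000-000000000000"), null, 2));
```

The `url` and `course` metadata are what let the agent cite the exact lesson later, which is why I’d capture them at ingestion time rather than trying to reconstruct them from the transcript text.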

Now, when I submit that original prompt as the owner of the courses, I’ll get a lot more context in the answer, including which lesson in which course to use to learn more.

Or, better yet, I could submit an even more detailed prompt…

PROMPT: Where can SPFx web parts be used in Microsoft 365? Add guidance information on if these are good options or not, and what I should be careful of. Incorporate sources other than Microsoft’s documentation in your response. Contextualize your response for [email protected].

Now, the agent will realize there’s an email address and use one of the actions to figure out if they are a student of the course.
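In Copilot, it’s the LLM that notices the email address and decides to call the action, so there’s no regex in your code. The TypeScript sketch below only mimics that data flow: `lookupStudent` stands in for a custom action backed by the Voitanos Learn REST API, and the roster, course name, and email address are all hypothetical.

```typescript
interface StudentRecord {
  email: string;
  courses: string[];
}

// Hypothetical in-memory stand-in for the course platform's REST API.
const roster: StudentRecord[] = [
  { email: "student@example.com", courses: ["Example SPFx course"] },
];

// Hypothetical action: look up a student by email.
async function lookupStudent(email: string): Promise<StudentRecord | undefined> {
  return roster.find((s) => s.email.toLowerCase() === email.toLowerCase());
}

const EMAIL_PATTERN = /[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}/;

// Find an email address in the prompt, call the action, and return extra context.
async function studentContext(prompt: string): Promise<string> {
  const match = prompt.match(EMAIL_PATTERN);
  if (!match) return "No person to contextualize for.";
  const email = match[0];
  const student = await lookupStudent(email);
  if (!student) return `${email} is not a student; recommend they enroll.`;
  return `${email} is enrolled in: ${student.courses.join(", ")}.`;
}

studentContext("Contextualize your response for student@example.com.").then(console.log);
```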

This is a much richer experience for the user that’s focused on a specific scenario!

Options for creating custom agents

Customers can create agents using a few different tools. There’s no one correct tool to use… each one is tailored for different audiences and scenarios, and each has its own advantages & disadvantages.

Agent Builder and Copilot Studio are designer tools intended for makers and no/low-code developers, and are ideal for small groups and prototyping. The Microsoft 365 Agents Toolkit (ATK) for VS Code, formerly called the Teams Toolkit (TTK), is perfect for full-stack and pro-code developers who want to create robust enterprise agents at scale with more control.

These tools all have different capabilities and limitations.

Learn More About the Different Agent Options!

Want to learn more about when you should choose Agent Builder over Copilot Studio or the Agents Toolkit? Join me in Atlanta, August 11-15, for TechCon 365!

I’m co-hosting a workshop with fellow MVP Mark Rackley that covers all the different options: Building Custom Agents for Microsoft 365 Copilot: Copilot Studio vs. Teams Toolkit.

What you can’t control (and alternatives)

The Microsoft 365 Copilot stack gives you a powerful, enterprise-ready AI experience, but with limited control over core components. You can’t change:

- Which foundational model is used or its configuration

- How the orchestrator works (to an extent… custom agents give you some control over the system prompt, knowledge sources, and actions)

- Responsible AI settings and filtering levels

- The core user experience

If you need more control over these aspects - perhaps you want to use a different LLM, implement custom orchestration logic, or have different content filtering requirements - Microsoft offers “custom engine agents.” These require you to build and host your own agent stack while still integrating with the Microsoft 365 user experience.

The bottom line

Understanding how Microsoft 365 Copilot works gives you the foundation to make smart decisions about when and how to extend it. The orchestrator coordinates a complex dance between user prompts, language models, and data sources to deliver contextual, grounded responses that respect your organization’s security boundaries.

While you can’t control every aspect of the stack, the extensibility options available through custom instructions, knowledge connectors, and actions provide plenty of room for customization without the complexity of building everything from scratch.

What questions do you have about how Copilot works?

Drop a comment below and let’s discuss how this foundation can help you build better solutions for your organization.

Microsoft MVP, Full-Stack Developer & Chief Course Artisan - Voitanos LLC.

Andrew Connell is a full stack developer who focuses on Microsoft Azure & Microsoft 365. He’s a 21-year recipient of Microsoft’s MVP award and has helped thousands of developers through the various courses he’s authored & taught. Whether it’s an introduction to the entire ecosystem, or a deep dive into a specific software, his resources, tools, and support help web developers become experts in the Microsoft 365 ecosystem, so they can become irreplaceable in their organization.