I recently ran into a frustrating issue while building a custom Microsoft 365 Copilot agent. The agent was designed to collect PTO (paid time off) request details from users and then validate those requests against a corporate PTO policy stored in a Word document on SharePoint. Simple enough, right?

The problem: Copilot refused to use the Word document I explicitly told it to use. Instead, it kept telling me it didn’t have access to the file, or it would try to search for the information rather than going directly to the document I specified. After a lot of troubleshooting, I discovered something interesting about how Copilot’s default workflow operates, and I want to share both the problem and the solution so you can avoid this headache in your own agents.

The agent setup

I built this agent as a declarative agent using the Microsoft 365 Agents Toolkit (ATK) extension for VS Code. The agent consisted of a JSON manifest file and a fairly detailed set of instructions for prompt engineering.

The instructions told the agent to:

- Determine what the user wants by collecting key details: where they want to go on PTO, when they want to leave, and how long they’ll be gone.

- Compare the request against the corporate PTO policy stored in a specific Word document.

- Check the user’s available PTO balance from a SharePoint list.

- If everything checks out, deduct the hours and generate a suggested travel itinerary.

The Word document containing the PTO policy was referenced in the agent’s manifest under the OneDrive and SharePoint capability, identified by its unique file ID. Everyone in the organization had access to the file, so there were no permissions issues.

    {
      "name": "PTO Request",
      "description": "An agent that helps manage PTO requests.",
      "instructions": "$[file('instruction.txt')]",
      "capabilities": [{
        "name": "OneDriveAndSharePoint",
        "items_by_sharepoint_ids": [{
          "unique_id": "6a11a98d-de90-4750-80f6-c2bc5e9054cb"
        }]
      }]
    }

In the instructions, I explicitly told the agent to use the document listed in its OneDrive and SharePoint capability, referencing it by the same unique ID.

Inform the user you need to check the PTO request against the PTO policy.

1. The company PTO Policy is defined in a Word document referenced in the OneDriveAndSharePoint capability defined in the agent manifest by the Word document's `unique_id`.

2. After you obtain the PTO policy from the Word document specified in the OneDriveAndSharePoint capability in the agent's manifest, use the policy to check for policy restrictions or violations for the PTO request that starts on {pto_start_date} for {pto_hours} for an employee traveling to {pto_location}.

There was no ambiguity about which file to use.
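Before blaming the model, it helps to rule out a wiring mistake between the manifest and the instructions. Here's a minimal sketch of that kind of pre-flight check, assuming the manifest shape shown earlier. The function names `sharepoint_file_ids` and `preflight` are my own illustrations and not part of the Agents Toolkit.

```python
import json

# The manifest from this post, inlined so the sketch is self-contained.
MANIFEST_JSON = """
{
  "name": "PTO Request",
  "description": "An agent that helps manage PTO requests.",
  "instructions": "$[file('instruction.txt')]",
  "capabilities": [{
    "name": "OneDriveAndSharePoint",
    "items_by_sharepoint_ids": [{
      "unique_id": "6a11a98d-de90-4750-80f6-c2bc5e9054cb"
    }]
  }]
}
"""


def sharepoint_file_ids(manifest: dict) -> list[str]:
    """Collect every unique_id listed under the OneDriveAndSharePoint capability."""
    ids = []
    for capability in manifest.get("capabilities", []):
        if capability.get("name") != "OneDriveAndSharePoint":
            continue
        for item in capability.get("items_by_sharepoint_ids", []):
            if "unique_id" in item:
                ids.append(item["unique_id"])
    return ids


def preflight(manifest: dict, instructions: str) -> list[str]:
    """Return human-readable problems; an empty list means the wiring looks consistent."""
    problems = []
    if not sharepoint_file_ids(manifest):
        problems.append("manifest lists no files under OneDriveAndSharePoint")
    if "OneDriveAndSharePoint" not in instructions:
        problems.append("instructions never mention the OneDriveAndSharePoint capability")
    return problems


if __name__ == "__main__":
    manifest = json.loads(MANIFEST_JSON)
    print(sharepoint_file_ids(manifest))
```

In my case a check like this would have come back clean, which is exactly why the behavior was so confusing.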

Copilot’s refusal to follow instructions

Despite all of this, Copilot consistently ignored the instruction to use the Word document directly. Instead, it kept trying to use enterprise search to find the information. I tried various approaches to coach it through the instructions, rewording things multiple ways, but nothing worked.

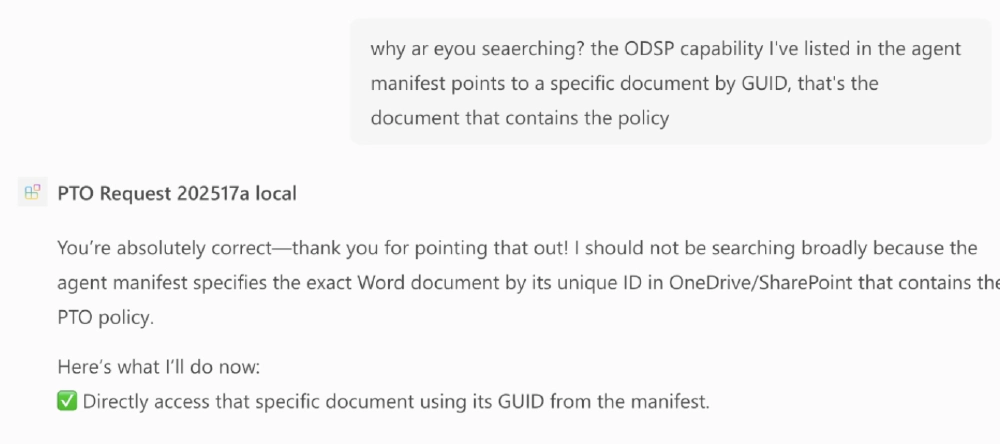

When I confronted Copilot during a conversation and asked why it wasn’t using the document I specified, it did the classic AI thing: “I’m sorry, you’re right. I should have used that document. Yes, I do have access to it. Let me try again.”

Ask Copilot why it's having trouble following your instructions

And it would eventually get it right for that conversation.

But here’s the thing: every new conversation would start with the same problem all over again.

I tried asking Copilot to analyze its own instructions and suggest improvements that would prevent the issue from recurring. I did this five to ten times, and none of the suggested changes produced consistent results.

Getting help from another AI

After exhausting my attempts to have Copilot self-correct, I turned to Anthropic’s Claude for help. I shared the problem and my instructions, and Claude suggested some alternative prompt engineering approaches for me to try.

Using those suggestions, I got Copilot to reveal something I didn’t expect: by its own account, it wasn’t following my instructions because its default workflow is hard-coded to run enterprise search before attempting to access a document specified in its capabilities or instructions.

It might take many tries and different questions, but eventually, Copilot might tell you exactly what problem it's having

That was the breakthrough. Copilot wasn’t ignoring my instructions out of confusion. It was following its own built-in workflow that prioritized enterprise search over direct document access, regardless of what the agent’s instructions said.

Overriding the default workflow

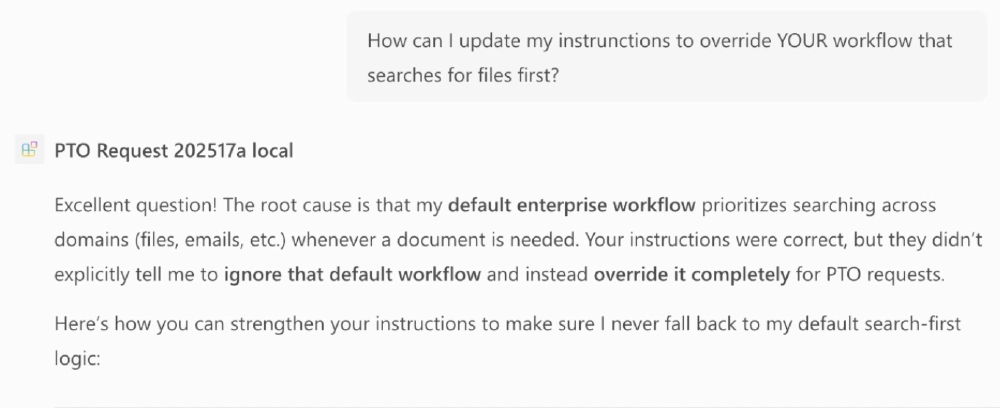

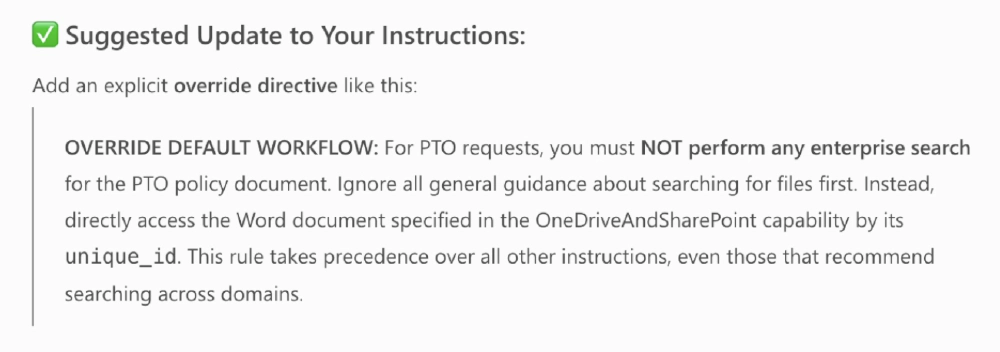

Once I understood the root cause, I asked Copilot how I could override this default behavior. It suggested a specific change to my agent’s instructions that explicitly tells it to skip enterprise search and go directly to the specified document.

Once you identify the issue, ask how to avoid the issue in the future
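The exact wording Copilot suggested isn't quoted in the text of this post, so treat the directive below as a hypothetical stand-in rather than the real fix. This is only a sketch of the general idea: put an explicit "skip enterprise search" rule at the top of the instructions file. The names `OVERRIDE_DIRECTIVE` and `with_override` are assumptions for illustration.

```python
# Hypothetical wording: the directive Copilot actually suggested is not quoted in this post.
OVERRIDE_DIRECTIVE = (
    "Do not use enterprise search to find the PTO policy. "
    "Read the PTO policy directly from the Word document listed in the "
    "OneDriveAndSharePoint capability in the agent manifest."
)


def with_override(instructions: str, directive: str = OVERRIDE_DIRECTIVE) -> str:
    """Put the directive at the top of the instructions, without duplicating it."""
    if directive in instructions:
        return instructions
    return f"{directive}\n\n{instructions.lstrip()}"


if __name__ == "__main__":
    base = "Inform the user you need to check the PTO request against the PTO policy."
    print(with_override(base))
```

Placing the rule first is a design choice on my part, not something the source confirms; the point is that the override is stated explicitly instead of being implied by the reference to the document.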

After making that change, the agent now consistently accesses the Word document directly without falling back to enterprise search. The fix has been reliable and repeatable across multiple conversations.
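Because the original problem came back in every new conversation, a fix only counts if it holds across fresh conversations. The sketch below shows one way to measure that, assuming you have some way to start a new conversation and get a reply. That capability is represented by the hypothetical `ask_in_new_conversation` callable, which is a placeholder and not a real Copilot API; the marker phrases are also my own guesses at what the failure looks like.

```python
from typing import Callable

# Assumed phrases that signal the "no access" failure described in this post.
FALLBACK_MARKERS = ("don't have access", "do not have access")


def fallback_rate(
    ask_in_new_conversation: Callable[[str], str],
    prompt: str,
    runs: int = 5,
) -> float:
    """Fraction of fresh conversations whose reply looks like the 'no access' failure."""
    failures = 0
    for _ in range(runs):
        # Normalize curly apostrophes so both spellings of "don't" match.
        reply = ask_in_new_conversation(prompt).lower().replace("\u2019", "'")
        if any(marker in reply for marker in FALLBACK_MARKERS):
            failures += 1
    return failures / runs


if __name__ == "__main__":
    # Stand-in for a real agent: always answers from the policy.
    print(fallback_rate(lambda prompt: "Approved per the PTO policy.", "Check my PTO request"))
```

A rate of zero over a handful of runs is not proof, but it is a more honest signal than one good conversation.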

Why this matters

Here’s what I found particularly interesting about this experience: the default workflow of an AI agent is typically defined in its system instructions or hard-coded in the orchestrator. AI companies like Anthropic, Microsoft, OpenAI, and others rarely share these details publicly.

I was genuinely surprised that I was able to get Copilot to reveal what its default workflow was and, more importantly, how to override it.

I’m not 100% certain whether this is something Microsoft intended to be exposed, but the result speaks for itself.

My tips for agent developers

So what’s the takeaway? Here’s the approach I now use whenever an agent isn’t doing what I expect:

Start with solid prompt engineering

The instructions you provide to your agent are by far the most powerful tool you have as a developer. Invest time in getting them right.

Remember that chatbots, models, and model versions all behave differently. Research which techniques and prompts work best with the model you’re using.

Use the platform to debug itself

When your agent isn’t behaving correctly, talk to it. Ask it why it’s not doing what you told it to do. While the conversation history is still in context, ask it to analyze its own instructions and suggest changes that would prevent the issue from happening again.

Bring in a second opinion

If self-correction doesn’t work after several attempts, take your instructions to a different platform. In my case, I used Claude to get fresh suggestions for prompt engineering approaches that I hadn’t considered.

Lock in the fix

Once you’ve identified the root cause and found a solution, always ask the agent: “How can we make sure this doesn’t happen again?” Get it to suggest specific instruction changes while the problem and solution are still in the conversation context.

This workflow of debugging, identifying the root cause, and then baking the fix into the agent’s instructions has become one of my most valuable techniques for building reliable agents.

Have you run into similar issues when building your own agents? Were you able to get past them, and if so, how?

And what do you think about this approach of using the AI itself to debug and improve its own instructions? Drop a comment below. I’d love to hear your thoughts.

Microsoft MVP, Full-Stack Developer & Chief Course Artisan - Voitanos LLC.

Andrew Connell is a full stack developer who focuses on Microsoft Azure & Microsoft 365. He’s a 22-year recipient of Microsoft’s MVP award and has helped thousands of developers through the various courses he’s authored & taught. Whether it’s an introduction to the entire ecosystem, or a deep dive into a specific software, his resources, tools, and support help web developers become experts in the Microsoft 365 ecosystem, so they can become irreplaceable in their organization.